… for Codecs & Media

Tip #1720: New! Framing Options in Compressor

Larry Jordan – LarryJordan.com

New compression options support more social media platforms.

The 4.5.3 update to Compressor adds compression settings that simplify cropping and compressing square and vertical media. Here’s how.

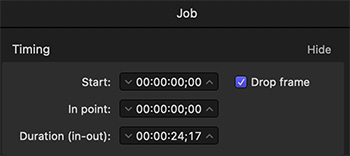

- Import a video clip for compression and apply a compression setting, for example, Apple Devices > Apple Devices HD (Best Quality).

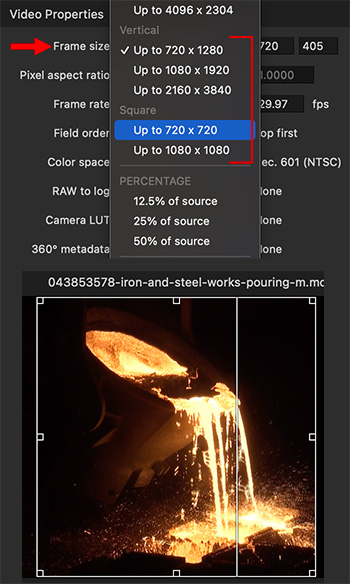

- Go to Video > Frame Size and choose one of the new vertical or square size options (red arrow and bracket).

- In the Viewer, drag one of the small cropping squares along the edge and watch the frame size numbers in the Inspector until you get to the frame size you want.

- By default, Compressor tries to preserve the entire frame. Dragging one of the cropping squares allows you to determine which part of the image to crop.

- Click in the middle of the selection square in the Viewer and drag to reposition the crop, which changes the part of the image that is kept.
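If you want to check the frame size numbers before you start dragging, the arithmetic behind a centered crop is easy to sketch. The Python below is only an illustration of the geometry, not how Compressor itself computes the crop. The function name `centered_crop` and the choice to round down to even pixel dimensions are assumptions made for this example.

```python
from fractions import Fraction


def centered_crop(src_w, src_h, aspect_w, aspect_h):
    """Return (x, y, width, height) of the largest centered crop
    with the requested aspect ratio. Hypothetical helper for illustration."""
    target = Fraction(aspect_w, aspect_h)
    source = Fraction(src_w, src_h)

    if target < source:
        # Target is narrower than the source: keep full height, trim the sides.
        crop_h = src_h
        crop_w = int(src_h * target)
    else:
        # Target is as wide or wider: keep full width, trim top and bottom.
        crop_w = src_w
        crop_h = int(src_w / target)

    # Assumption: round down to even dimensions, which many video
    # encoders expect.
    crop_w -= crop_w % 2
    crop_h -= crop_h % 2

    # Center the crop rectangle inside the source frame.
    x = (src_w - crop_w) // 2
    y = (src_h - crop_h) // 2
    return x, y, crop_w, crop_h


if __name__ == "__main__":
    # A 1920 x 1080 source cropped to square, then to vertical 9:16.
    print(centered_crop(1920, 1080, 1, 1))   # (420, 0, 1080, 1080)
    print(centered_crop(1920, 1080, 9, 16))  # (657, 0, 606, 1080)
```

For a 1920 x 1080 clip, a square crop keeps a 1080 x 1080 area and discards 420 pixels on each side. Dragging the selection in the Viewer corresponds to changing the `x` and `y` offsets while the width and height stay the same.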

EXTRA CREDIT

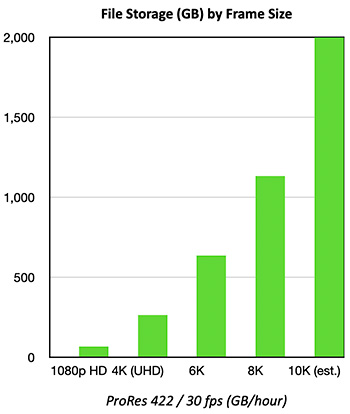

You can use this technique with other codecs, for example, ProRes 422. Compression options will vary by codec and source clip.