
Tip #730: Tips to Control Depth of Field

Larry Jordan – LarryJordan.com

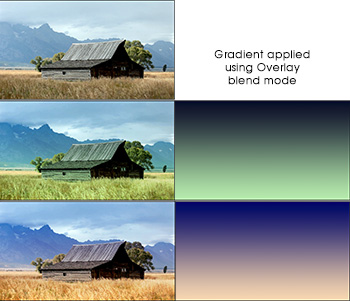

Big aperture = small f/number = small depth of field

This article, written by Brian Auer, first appeared on PictureCorrect.com. This is an excerpt.

Depth of field (DOF) is one of the most important factors in determining the look and feel of an image, so it pays to know how to control it.

Depth of field is the range of distances, in front of and behind the point of focus, over which a photo appears acceptably sharp; everything outside that range becomes progressively blurrier. A large, or wide, depth of field keeps much of the photo in focus. A small, or narrow, depth of field leaves much more of the photo out of focus.

There are four main factors that control depth of field: lens aperture, lens focal length, subject distance, and sensor size. Your sensor size is fixed by your camera, so you won't have much luck changing that. Your focal length and distance to the subject are usually determined by your choice of composition. That leaves the lens aperture as your primary control over depth of field.
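
For readers who want the arithmetic behind these four factors, the commonly used approximate depth-of-field formulas are shown below (they assume the subject is much farther away than the focal length). The symbols are added here for illustration: N is the f-number, f the focal length, s the subject distance, and c the circle of confusion, the largest blur spot still perceived as sharp. The circle of confusion is where sensor size enters; about 0.03 mm is a common value for full-frame cameras, with smaller values used for smaller sensors.

$$
H \approx \frac{f^{2}}{N\,c}, \qquad
D_{\mathrm{near}} \approx \frac{H\,s}{H + s}, \qquad
D_{\mathrm{far}} \approx \frac{H\,s}{H - s} \quad (s < H), \qquad
\mathrm{DOF} = D_{\mathrm{far}} - D_{\mathrm{near}}
$$

Here H is the hyperfocal distance; when s is at or beyond H, the far limit extends to infinity. Raising N shrinks H and widens the sharp zone, a longer f grows H with the square of the focal length and narrows it, and moving closer (smaller s) narrows it as well.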

Before I get to the tips, let’s get a few things straight:

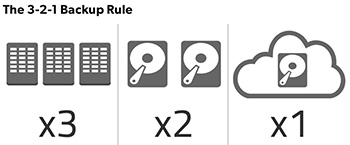

- BIG APERTURE = SMALL F-NUMBER = SMALL DEPTH OF FIELD

- SMALL APERTURE = BIG F-NUMBER = BIG DEPTH OF FIELD

Large apertures (small f-numbers) cause a narrow DOF, while small apertures (large f-numbers) cause a wide DOF.
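
A small sketch of both halves of that rule, assuming the approximate formulas H ≈ f²/(N·c), near ≈ H·s/(H+s), far ≈ H·s/(H−s), and a 0.03 mm full-frame circle of confusion. The f-number is the focal length divided by the aperture diameter, which is why a bigger opening gets a smaller number. The 50mm lens, 3 m subject distance, and the helper names `aperture_diameter_mm` and `depth_of_field_mm` are hypothetical choices for illustration.

```python
import math

def aperture_diameter_mm(focal_mm, f_number):
    """f-number N = focal length / aperture diameter, so diameter = f / N."""
    return focal_mm / f_number

def depth_of_field_mm(focal_mm, f_number, subject_mm, coc_mm=0.03):
    """Approximate total depth of field in mm (math.inf if the far limit is infinite)."""
    hyperfocal = focal_mm ** 2 / (f_number * coc_mm)
    if subject_mm >= hyperfocal:
        return math.inf
    near = hyperfocal * subject_mm / (hyperfocal + subject_mm)
    far = hyperfocal * subject_mm / (hyperfocal - subject_mm)
    return far - near

# 50mm lens focused on a subject 3 m away; only the f-number changes.
for n in (2.8, 16):
    diameter = aperture_diameter_mm(50, n)
    dof = depth_of_field_mm(50, n, 3000)
    print(f"f/{n}: aperture about {diameter:.1f} mm wide, depth of field about {dof / 1000:.2f} m")
```

The wide opening at f/2.8 gives well under a meter of sharp depth, while the narrow opening at f/16 stretches it to several meters.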

If you want to bring an entire scene into focus and keep it sharp, use a small aperture. But be careful not to go too small: at the smallest apertures, diffraction starts to soften the image, so overall sharpness deteriorates.
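
The softening at small apertures comes from diffraction: light passing through a small opening spreads into a blur spot (the Airy disk) whose diameter is roughly 2.44 × wavelength × f-number. The sketch below compares that spot with the circle of confusion, assuming green light at about 550 nm and a 0.03 mm full-frame circle of confusion; the helper name `airy_disk_mm` is hypothetical.

```python
# Assumptions for illustration: green light and a common full-frame circle of confusion.
WAVELENGTH_MM = 0.00055  # ~550 nm
COC_MM = 0.03

def airy_disk_mm(f_number, wavelength_mm=WAVELENGTH_MM):
    """Approximate Airy disk diameter (to the first dark ring): 2.44 * wavelength * N."""
    return 2.44 * wavelength_mm * f_number

for n in (8, 16, 22, 32):
    spot = airy_disk_mm(n)
    verdict = "larger than" if spot > COC_MM else "within"
    print(f"f/{n}: diffraction spot about {spot:.3f} mm ({verdict} the {COC_MM} mm circle of confusion)")
```

With these numbers the diffraction spot is about as large as the circle of confusion at f/22 and clearly larger at f/32. On high-resolution sensors, softening can show up at the pixel level at wider apertures than that.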

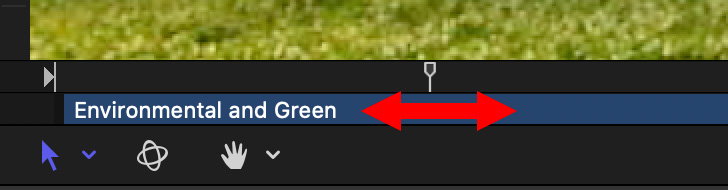

The DOF extends both in front of and behind the point of focus, but it usually extends further behind than in front. Keep this in mind when choosing your focus point: as a rule of thumb, focus about a third of the way into the scene rather than halfway.
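
To see why the sharp zone reaches further behind the focus point, this sketch splits it into the part in front of and the part behind the subject, using the approximations H ≈ f²/(N·c), near ≈ H·s/(H+s), far ≈ H·s/(H−s). The 35mm lens at f/8 focused at 2 m, the 0.03 mm circle of confusion, and the helper name `dof_split_mm` are hypothetical choices for illustration.

```python
def dof_split_mm(focal_mm, f_number, subject_mm, coc_mm=0.03):
    """Return (in_front, behind): depth of the sharp zone on each side of the focus point, in mm.

    Uses the approximations H = f^2 / (N * c), near = H*s/(H+s), far = H*s/(H-s).
    """
    hyperfocal = focal_mm ** 2 / (f_number * coc_mm)
    if subject_mm >= hyperfocal:
        raise ValueError("focus is at or beyond the hyperfocal distance; the far limit is infinite")
    near = hyperfocal * subject_mm / (hyperfocal + subject_mm)
    far = hyperfocal * subject_mm / (hyperfocal - subject_mm)
    return subject_mm - near, far - subject_mm

# Hypothetical example: 35mm lens at f/8, focused 2 m away.
front, behind = dof_split_mm(35, 8, 2000)
share_in_front = front / (front + behind)
print(f"in front: {front:.0f} mm, behind: {behind:.0f} mm ({share_in_front:.0%} of the zone is in front)")
```

Under these approximations the share in front works out to (H − s)/(2H): close to half for subjects much nearer than the hyperfocal distance, exactly a third when the subject sits at H/3, and shrinking toward zero as the focus approaches H. The "one third" advice is therefore a handy middle-of-the-road figure rather than a fixed ratio.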

Your focal length is usually determined by your choice of composition, but you should know how it affects your depth of field. At the same aperture and subject distance, longer focal lengths (such as 200mm) have less depth of field than shorter focal lengths (such as 35mm).
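
Holding aperture and subject distance fixed, the same approximate formulas (H ≈ f²/(N·c), near ≈ H·s/(H+s), far ≈ H·s/(H−s)) show how strongly focal length matters, because the hyperfocal distance grows with the square of the focal length. The example values (f/5.6, subject at 5 m, 0.03 mm circle of confusion) are assumptions, and `depth_of_field_mm` is a hypothetical helper.

```python
import math

def depth_of_field_mm(focal_mm, f_number, subject_mm, coc_mm=0.03):
    """Approximate total depth of field in mm (math.inf if the far limit is infinite)."""
    hyperfocal = focal_mm ** 2 / (f_number * coc_mm)
    if subject_mm >= hyperfocal:
        return math.inf
    near = hyperfocal * subject_mm / (hyperfocal + subject_mm)
    far = hyperfocal * subject_mm / (hyperfocal - subject_mm)
    return far - near

# Same aperture (f/5.6) and same subject distance (5 m); only the focal length changes.
dof_35 = depth_of_field_mm(35, 5.6, 5000)
dof_200 = depth_of_field_mm(200, 5.6, 5000)
print(f"35mm: {dof_35 / 1000:.2f} m of depth; 200mm: {dof_200 / 1000:.2f} m of depth")
```

This comparison keeps the camera in the same spot, so the 200mm frame is much tighter. If you instead change distance to keep the subject the same size in the frame, the gap between the two lenses shrinks considerably.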

EXTRA CREDIT

The link at the top has videos illustrating these concepts, as well as more information.