Tip #954: VP9 Refresher

Larry Jordan – LarryJordan.com

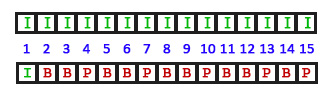

YouTube uses the VP9 codec exclusively for 4K HDR media.

Apple introduced support for the VP9 codec in the fourth beta of macOS Big Sur, specifically for Safari. Here’s a quick refresher.

According to Wikipedia:

> VP9 is an open and royalty-free video coding format developed by Google. It is supported in Windows, Android and Linux, but not Mac or iOS.
>
> VP9 is the successor to VP8 and competes mainly with MPEG’s High Efficiency Video Coding (HEVC/H.265).
>
> In contrast to HEVC, VP9 support is common among modern web browsers with the exception of Apple’s Safari (both desktop and mobile versions). Android has supported VP9 since version 4.4 KitKat.
>
> An offline encoder comparison between libvpx, two HEVC encoders and x264 in May 2017 by Jan Ozer of Streaming Media Magazine, with encoding parameters supplied or reviewed by each encoder vendor (Google, MulticoreWare and MainConcept respectively), and using Netflix’s VMAF objective metric, concluded that “VP9 and both HEVC codecs produce very similar performance” and “Particularly at lower bitrates, both HEVC codecs and VP9 deliver substantially better performance than H.264”.

Here’s a link for more information.

.jpg)