
Tip #1043: Comparing HDR Grayscale to SDR

Larry Jordan – LarryJordan.com

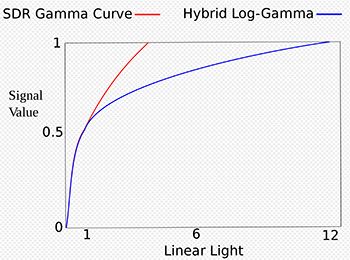

HDR HLG matches SDR in the shadows and midtones, but adds room for brighter highlights.

As I was researching my recent webinar on “New Features in Adobe Premiere Pro,” I came across an interesting graph that compares HDR HLG grayscale values with SDR.

We are all familiar with the grayscale values in SDR (Standard Dynamic Range) media. It’s the Rec. 709 HD footage we work with on a daily basis.

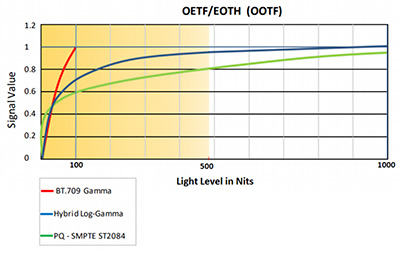

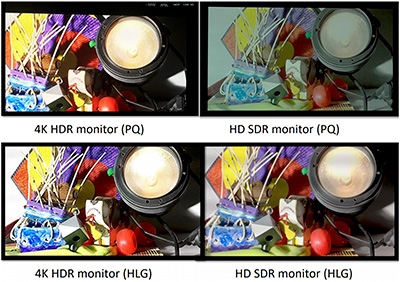

HDR involves more than simply brighter pixels, but grayscale is the concept that matters here. HDR video uses two main formats: HLG and PQ. HLG (Hybrid Log Gamma) is optimized for broadcast, while PQ (Perceptual Quantizer) is optimized for digital display. Both Final Cut Pro and Premiere Pro support HLG media. But what does it mean to say HLG is "optimized for broadcast"? That's where this chart comes in.

On the chart, SDR grayscale values are essentially linear: a "straight line" from 0 IRE (pure black) to 100 IRE (pure white). This 0 to 100 IRE range is what the entire broadcast signal path is designed to carry.
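IRE is an analog-era unit, but in digital video it is commonly used as shorthand for "percent of the signal range." As a minimal sketch, assuming 10-bit "narrow" (legal) range video as used for both Rec. 709 SDR and HLG, the Python below maps IRE levels to code values, with 0 IRE at code 64 and 100 IRE at code 940. The function names are hypothetical, chosen for illustration.

```python
# Sketch: map IRE (0-100) to 10-bit narrow-range (legal-range) code values.
# Assumption: 10-bit video where black (0 IRE) = 64 and nominal white (100 IRE) = 940.
# Function names are hypothetical, for illustration only.

BLACK_10BIT = 64
WHITE_10BIT = 940


def ire_to_code(ire: float) -> int:
    """Convert an IRE level (0-100) to the nearest 10-bit narrow-range code value."""
    if not 0.0 <= ire <= 100.0:
        raise ValueError("IRE must be between 0 and 100")
    return round(BLACK_10BIT + (ire / 100.0) * (WHITE_10BIT - BLACK_10BIT))


def code_to_ire(code: int) -> float:
    """Convert a 10-bit narrow-range code value back to an IRE level."""
    return (code - BLACK_10BIT) / (WHITE_10BIT - BLACK_10BIT) * 100.0


if __name__ == "__main__":
    # Print a few reference levels
    for ire in (0, 50, 75, 100):
        print(f"{ire:>3} IRE -> code {ire_to_code(ire)}")
```

With these constants, 50 IRE lands at code 502 and 75 IRE at code 721. HLG uses the same 64 to 940 range, which is part of why it can travel through existing broadcast infrastructure.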

HDR HLG mirrors the SDR grayscale values from 0 to 75 IRE (though this varies somewhat between standards in different countries), then encodes the top 25% of the range, the highlights, as logarithmic rather than linear values.
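For readers who want the math behind the chart, here is a sketch in Python of the HLG transfer function (OETF) as defined in ITU-R BT.2100. It maps normalized scene light E (0 to 1, where 1 is peak) to a signal value E' (0 to 1, that is, 0 to 100%). The lower segment, E' = sqrt(3E), is a square-root curve, close in shape to the roughly 0.45 power curve used by Rec. 709, which is why HLG shadows and midtones look familiar on SDR displays. Above 1/12 of peak scene light, the curve switches to a logarithmic segment; in the BT.2100 formula itself, that switch happens at 50% signal. The function name is our own, not from any library.

```python
import math

# ITU-R BT.2100 HLG constants (B and C are derived from A, as in the standard)
A = 0.17883277
B = 1.0 - 4.0 * A                # ~0.28466892
C = 0.5 - A * math.log(4.0 * A)  # ~0.55991073


def hlg_oetf(e: float) -> float:
    """Map normalized scene light E (0..1, 1 = peak) to HLG signal E' (0..1).

    Hypothetical helper name; the math follows the BT.2100 HLG OETF.
    """
    if not 0.0 <= e <= 1.0:
        raise ValueError("E must be in [0, 1]")
    if e <= 1.0 / 12.0:
        # Lower segment: square-root (gamma-like) curve for shadows and midtones
        return math.sqrt(3.0 * e)
    # Upper segment: logarithmic curve that compresses the highlights
    return A * math.log(12.0 * e - B) + C


if __name__ == "__main__":
    for e in (0.0, 0.01, 1.0 / 12.0, 0.25, 0.5, 1.0):
        print(f"E = {e:.4f} -> signal {hlg_oetf(e) * 100:5.1f}%")
```

Because B and C are derived from A, the two segments meet exactly at 50% signal, and full scene light maps to (very nearly) 100% signal.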

This allows HDR HLG to carry much brighter highlights than SDR while still fitting within the same 100 IRE range. However, there's a trade-off: while HDR HLG is compatible with broadcast, HDR PQ preserves more highlight detail. Both HDR formats are much brighter than SDR.
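To see how much brightness the top of the range holds, we can run the HLG curve in reverse. ITU-R BT.2408, for example, places HLG reference (diffuse) white at 75% of the signal, leaving the top quarter for highlights. The sketch below, in Python with the same BT.2100 constants (the function name is hypothetical), converts a signal level back to normalized scene light, before any adjustment the display applies.

```python
import math

# ITU-R BT.2100 HLG constants
A = 0.17883277
B = 1.0 - 4.0 * A
C = 0.5 - A * math.log(4.0 * A)


def hlg_inverse_oetf(signal: float) -> float:
    """Map HLG signal E' (0..1) back to normalized scene light E (0..1, 1 = peak).

    Hypothetical helper name; this inverts the BT.2100 HLG OETF.
    """
    if not 0.0 <= signal <= 1.0:
        raise ValueError("signal must be in [0, 1]")
    if signal <= 0.5:
        # Inverse of the square-root segment: E = E'^2 / 3
        return signal * signal / 3.0
    # Inverse of the logarithmic segment
    return (math.exp((signal - C) / A) + B) / 12.0


if __name__ == "__main__":
    for pct in (25, 50, 75, 100):
        e = hlg_inverse_oetf(pct / 100.0)
        print(f"{pct:>3}% signal -> {e * 100:5.1f}% of peak scene light")
```

Running it shows that 75% signal corresponds to roughly 26.5% of peak scene light, so the top quarter of the signal range carries about the remaining three quarters of the scene's brightness range. That compression is what the logarithmic segment buys.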

EXTRA CREDIT

If you are creating an HDR project, it is important to know which format your distributor supports BEFORE you do the color grade, because grading for HLG differs from grading for PQ, and you cannot simply switch between them afterward.