… for Codecs & Media

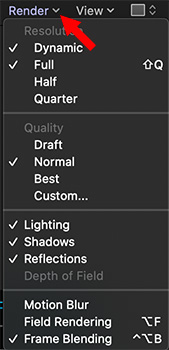

Tip #505: Why HDV Media is a Pain in the Neck

Larry Jordan – LarryJordan.com

Interlacing, non-square pixels, and deep compression make this a challenging media format.

HDV (short for high-definition DV) media was a highly popular, but deeply flawed, video format in the 2000s.

DV (Digital Video) ushered in the wide acceptance of portable video cameras (though still at standard-definition image sizes) and drove the adoption of computer-based video editing.

NOTE: While EMC and Avid led the way in computerized media editing, it was Apple Final Cut Pro’s release in 1999 that converted a technology into a massive consumer force.

HDV was originally developed by JVC and supported by Sony, Canon and Sharp. First released in 2003, it was designed as an affordable recording format for high-definition video.

There were, however, three big problems with the format:

- It was interlaced

- It used non-square pixels

- It was highly compressed

If the HDV media was headed to broadcast or for viewing on a TV set, interlacing was no problem. Both distribution technologies fully supported interlacing.

But, if the video was posted to the web, ugly horizontal black lines radiated out from all moving objects. The only way to get rid of them was to deinterlace the media, which, in most cases, resulted in cutting the vertical resolution in half.
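To see why deinterlacing costs vertical resolution, here is a minimal Python sketch of one simple approach: keep a single field and repeat each of its lines. The function name and the toy six-line "frame" are hypothetical, the frame is assumed to have an even number of lines, and real editing software may use more sophisticated methods.

```python
def deinterlace_drop_field(frame, keep="top"):
    """Keep one field and repeat each of its lines to restore the frame height.

    `frame` is a list of scan lines (each a list of pixel values).
    Assumes an even number of lines, as in a 1080-line frame.
    """
    start = 0 if keep == "top" else 1
    field = frame[start::2]          # every other line = one field
    doubled = []
    for line in field:
        doubled.append(list(line))   # original line
        doubled.append(list(line))   # duplicate fills the discarded line
    return doubled

# Toy frame: 6 lines, each filled with its own line number.
frame = [[row] * 4 for row in range(6)]
result = deinterlace_drop_field(frame)

# The output is still 6 lines tall, but only 3 of them are distinct.
unique_lines = len({tuple(line) for line in result})
print(len(result), unique_lines)
```

The frame keeps its height, but half of the original lines are gone, which is the halved vertical resolution described above.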

In the late 2000s, Sony and others released progressive HDV recording, but the damage to users' perception of the image was done.

NOTE: 1080i HDV contained roughly 4.5 times as many pixels per field as NTSC SD (1440 x 540 versus 720 x 240), yet was compressed at the same data rate. (In interlaced media, two fields make a frame.)
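As a rough check on the note above, the pixel counts can be worked out directly. This sketch assumes a stored HDV 1080i raster of 1440 x 1080 and uses the NTSC DV raster of 720 x 480 as the SD baseline. The exact multiple depends on which SD raster is used for comparison.

```python
# Assumed raster sizes (stored pixels, not display pixels).
HDV_WIDTH, HDV_HEIGHT = 1440, 1080   # HDV 1080i
SD_WIDTH, SD_HEIGHT = 720, 480       # NTSC DV, used here as the SD baseline

def pixels_per_field(width, height):
    """An interlaced frame is two fields, each carrying half the lines."""
    return width * (height // 2)

hdv_field = pixels_per_field(HDV_WIDTH, HDV_HEIGHT)
sd_field = pixels_per_field(SD_WIDTH, SD_HEIGHT)
ratio = hdv_field / sd_field

print(hdv_field, sd_field, ratio)
```

With the same data rate spread over that many more pixels, each HDV pixel gets far fewer bits than an SD pixel, which is why the format had to compress so heavily.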

The non-square pixels meant that 1080-line images were recorded at 1440 pixels horizontally, with each wider pixel stretched on playback to fill a full 1920-pixel line. In other words, HDV pixels were short and fat, not square.
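The "short and fat" shape can be expressed as a pixel aspect ratio. This is a small Python sketch using only the widths mentioned above. The variable names are illustrative, and the 1080-line height is assumed.

```python
from fractions import Fraction

STORED_WIDTH = 1440    # pixels recorded per line
DISPLAY_WIDTH = 1920   # pixels per line when shown full width
HEIGHT = 1080          # lines per frame (assumed)

# How much wider than tall each stored pixel must be drawn.
pixel_aspect_ratio = Fraction(DISPLAY_WIDTH, STORED_WIDTH)

# Shape of the stored raster, then of the displayed picture.
storage_aspect_ratio = Fraction(STORED_WIDTH, HEIGHT)
display_aspect_ratio = storage_aspect_ratio * pixel_aspect_ratio

print(pixel_aspect_ratio, storage_aspect_ratio, display_aspect_ratio)
```

Each stored pixel is drawn one-third wider than it is tall, turning a 4:3-shaped raster into a 16:9 picture. Software that ignores this ratio displays HDV footage horizontally squeezed.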

As fully progressive cameras became popular, especially DSLR cameras with their higher-quality images, HDV gradually faded in popularity. But, even today, we are dealing with legacy HDV media and the image challenges it presents.